As the DevOps movement tries to narrow the gap between software development and operations, it emphasizes faster and more reliable software delivery. However, it doesn’t prescribe any concrete implementation details. It describes the goal to aim for, but the journey can be unique to each organization. Continuous integration is the first part of this journey. It enables developers to continuously merge their new features and bug fixes. Typically, continuous integration happens in a different environment from the one a developer uses to build and test the software locally, but it runs these same steps and more. With a little more thought put into designing the right tooling, we can get a single interface for building and testing the software that is independent of the CI tool and works across different environments.

In this post we expand on this idea, look at its benefits, and show how we achieved it with Jenkins for one of our clients. If you’re an experienced DevOps practitioner, feel free to skip the first two sections, which establish some background.

Infrastructure as code

Practices such as Infrastructure as Code (IaC) promote managing parts of the infrastructure with the same practices we follow when developing application software. This brings many of the benefits of application development best practices to infrastructure. Some examples are:

Using version control

Change review and approval

Distributing packages to other people who might be solving the same problems

Writing tests!

Once we have moved to IaC, we are back to the familiar problems of maintaining the quality of a codebase. How do we avoid duplicating code written by multiple people on the team? How do we design the codebase to be independent of the underlying tool?

Continuous Integration

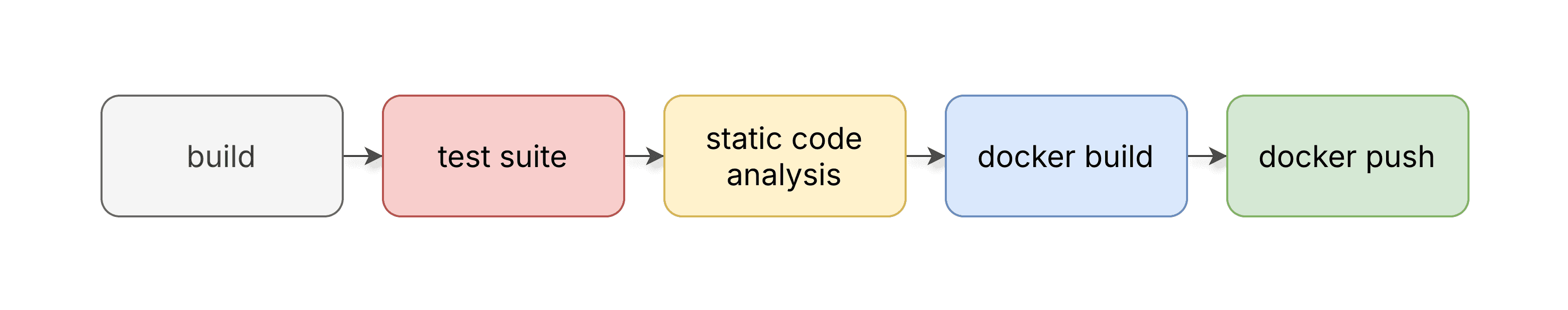

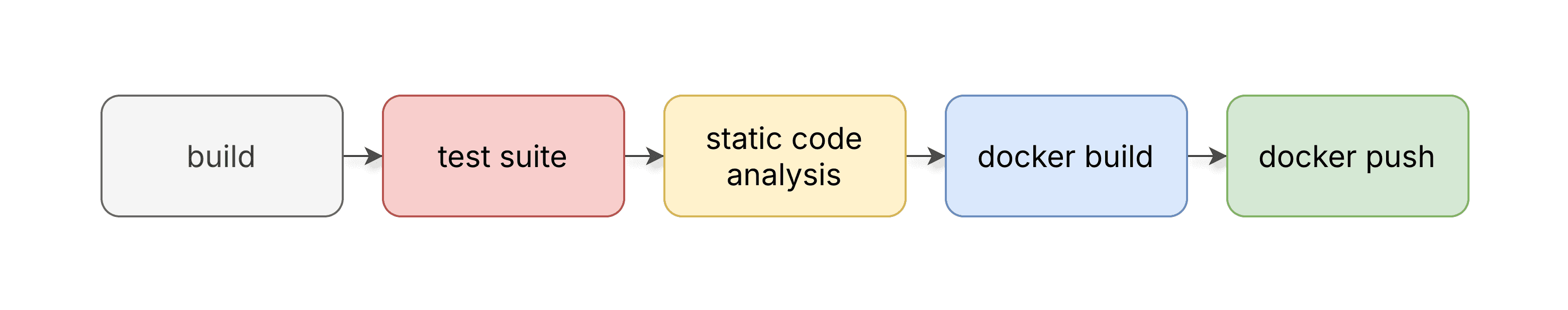

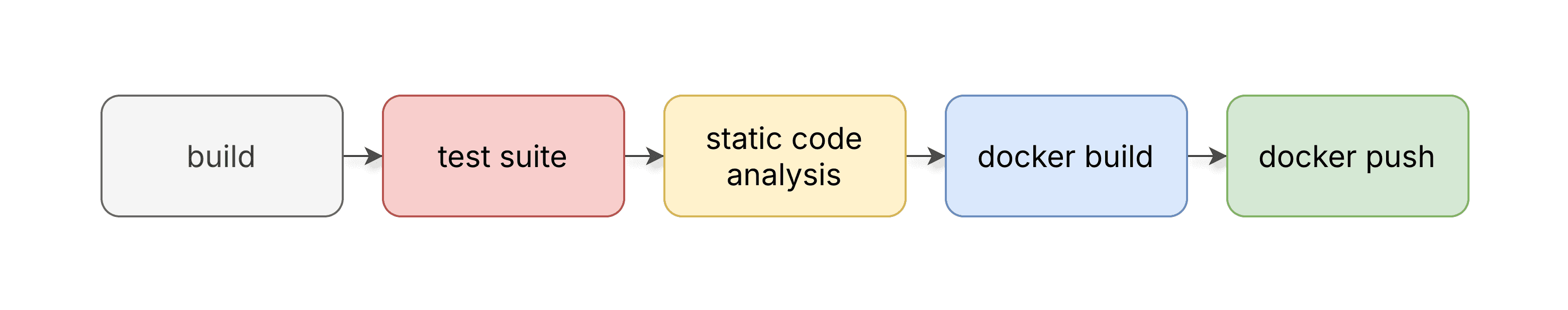

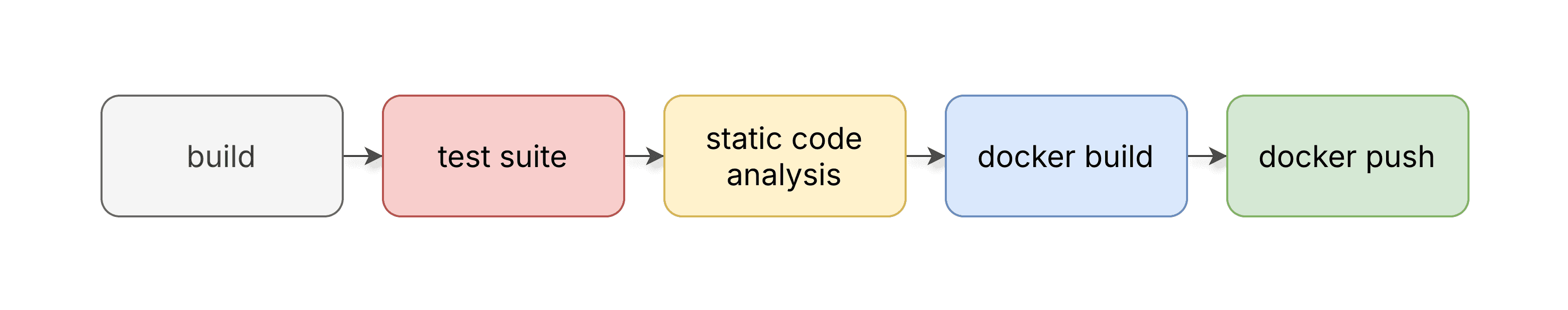

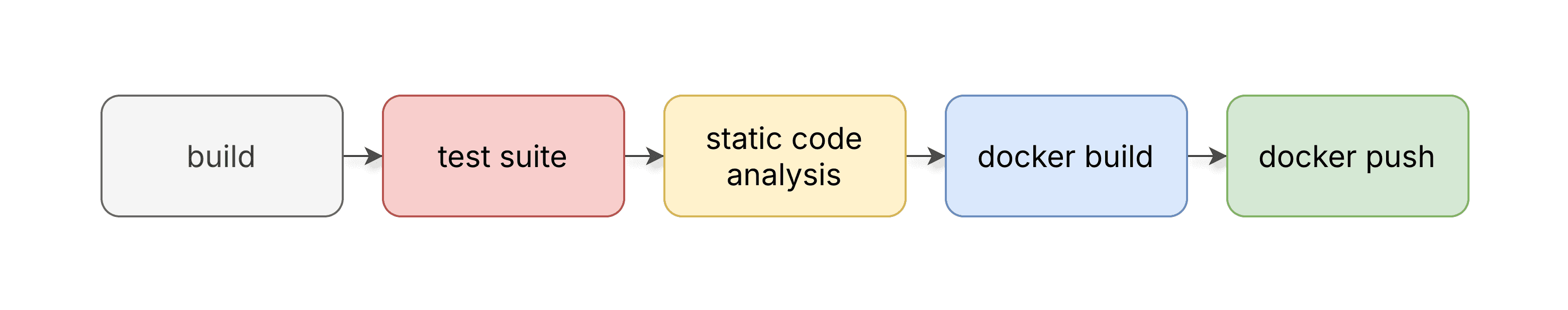

Continuous Integration is one of the pillars of modern DevOps. It encourages integrating code more frequently, which in turn reduces the time to market. Once a developer pushes code to a feature or other non-production branch, a typical continuous integration process runs a sequence of steps, such as building the code, running the tests, and analyzing the source.

If any step fails, all the subsequent steps are skipped and the overall status is marked as a failure. This helps the team catch issues early and gives them the confidence to merge the change into the mainline code.
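The fail-fast behaviour described above can be sketched in a few lines of Bash. This is only an illustration: `run_pipeline` and the three step functions are hypothetical names, and the steps merely print a message instead of doing real work.

```shell
#!/bin/bash
# Run each step in order and stop at the first one that fails.
run_pipeline() {
  local step
  for step in "$@"; do
    echo "==> ${step}"
    if ! "${step}"; then
      echo "Step '${step}' failed; skipping the remaining steps" >&2
      return 1
    fi
  done
  echo "All steps passed"
}

# Hypothetical stand-ins for real pipeline steps.
build_step() { echo "building"; }
test_step()  { echo "testing"; return 1; }  # simulate a failing test run
push_step()  { echo "pushing"; }

# push_step never runs because test_step fails before it.
run_pipeline build_step test_step push_step || echo "Pipeline status: FAILURE"
```

A CI tool applies the same rule between its stages; the loop simply makes that rule visible.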

Continuous Integration != CI Tools

CI doesn’t dictate which tools to use. It describes the process, not the implementation. Teams can then select the tools that fit their requirements and implement the process. One popular tool is Jenkins.

If you look at the CI process, some of the initial steps apply to the software development flow as well. Any developer needs to build the code and test it on their local system. Most likely, the steps to build the code are the same in the local setup and the CI setup. When running tests, depending on the quality and coverage of the tests, a developer might choose to run only the tests related to the module they are working on. The CI environment, however, runs the entire test suite to ensure no corner cases are failing.

The gap between Local and CI environment

One of the core principles of the DevOps movement is to reduce the gap between development and operations. Since the CI environment is by nature separate from the developer’s local system, it does not offer the same ease of debugging when a pipeline fails. Developers might need to familiarise themselves with the tools that CI systems provide. To avoid this friction, we need to build pipelines in a way that lets the developer focus on the problem itself without needing any special knowledge.

Bridging this gap with Makefiles and Bash scripts

When we were building a CI pipeline for one of our clients, we solved this problem by sticking to good old tools: Bash and Makefiles! We started by introducing Makefiles in all of the repositories. Every repository, irrespective of the language (Java/Go/Node) or framework (Spring Boot/Flink), had a Makefile with the following targets:

build: to build the code

test: to test the code

sca: source code analysis

build_docker_local: to build the docker image with the latest tag

    # Targets are commands, not files, so declare them phony
    .PHONY: default gradle_build test

    default:
    	echo "Use one of the - build|test|docker_build|docker_push|docker_cleanup|sca"

    gradle_build:
    	/bin/bash ./build_scripts.sh gradle_build

    test:
    	/bin/bash ./build_scripts.sh test

    #.... similar other steps

We created a single Bash script with a function defined for each of these make targets. So essentially, the make command just invokes a Bash function from the script, but it provides a good standard to maintain across repositories. All the functions in this Bash script are self-contained, and no other library or tool is required to invoke them. So a developer can easily invoke make commands to build and test on their local system.

    #!/bin/bash

    # If a helper file is found locally, read it.
    if [ -f "ci-helper.sh" ]; then
      source "ci-helper.sh"
    fi

    gradle_build() {
      : #.. custom build step
    }

    test() {
      echo "Running Tests...."
      # custom test command
    }

    # No sca() defined, so it will be used from ci-helper.sh

    # Override the docker build command if needed,
    # but use the common docker tag format defined in ci-helper.sh
    build_docker_local() {
      # Add --build-arg KEY=VALUE options here as needed
      docker build --rm --tag "$(__docker_local_tag)" .
    }

    # Magic to keep all bash in a single file!
    # Call the function supplied in the arguments
    "$@"
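Calling whatever arrives in the arguments is compact, but a mistyped target then surfaces as a generic "command not found" error. A slightly more defensive variant, sketched below, checks the name against a list of known functions first. The `dispatch` function is hypothetical and not part of the original script, and the two step functions only print a message.

```shell
#!/bin/bash
# Hypothetical repository-specific steps; real ones would call Gradle, npm, etc.
gradle_build() { echo "building with args: $*"; }
sca() { echo "running static analysis"; }

# Run the function named by the first argument, passing along the rest.
dispatch() {
  local target="${1:-}"
  case "${target}" in
    gradle_build|sca)
      shift
      "${target}" "$@"
      ;;
    *)
      echo "Unknown target '${target}'. Use one of: gradle_build|sca" >&2
      return 2
      ;;
  esac
}

dispatch gradle_build --info   # prints "building with args: --info"
dispatch deploy || echo "dispatch exited with status $?"
```

The trade-off is that every new function has to be added to the `case` list, which the one-line version avoids.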

The ci-helper is another Bash script, into which we refactored common CI-related utilities to avoid duplicating them everywhere. This file is only available in the CI environment, and the repository’s Bash script sources it when it is present. The functions inside the utility library call the repository-specific functions and provide a higher-level abstraction useful for CI. For example, when the CI system runs make docker_push, it invokes the docker_push function defined by the utility library, which calls build_docker_local defined by the repository-specific Bash script, then tags the image appropriately, authenticates with the private Docker registry, and pushes it.

The only remaining part is using these Makefiles in a CI tool. We used Jenkins because that’s what most developers were already familiar with and it served our purpose. But if you look at the Jenkinsfile, the steps are simple make calls. If we ever want to switch to another CI tool, it’s just a matter of changing the tool itself. All the other details remain the same.
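To make the division of labour concrete, here is a rough sketch of what such a helper might contain. The real ci-helper.sh is not shown in this post, so the variable names, the registry host, and the tag format are all assumptions. `DOCKER` defaults to a dry run that only prints the commands, so the sketch can be executed without a Docker daemon.

```shell
#!/bin/bash
# Sketch of a shared ci-helper.sh; every name and default below is an assumption.
# DOCKER defaults to a dry run that only prints each docker command.
DOCKER="${DOCKER:-echo docker}"
REGISTRY="${REGISTRY:-registry.example.com}"
IMAGE_NAME="${DOCKER_IMAGE_NAME:-my-service}"
IMAGE_TAG="${DOCKER_IMAGE_TAG:-dev}"

# Common local tag format shared by every repository.
__docker_local_tag() { echo "${IMAGE_NAME}:latest"; }

# Default local build; a repository script may redefine it after sourcing this file.
build_docker_local() {
  ${DOCKER} build --rm --tag "$(__docker_local_tag)" .
}

# Higher-level CI step: build locally, tag for the registry, log in, push.
docker_push() {
  local remote="${REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}"
  build_docker_local || return 1
  ${DOCKER} tag "$(__docker_local_tag)" "${remote}" || return 1
  ${DOCKER} login "${REGISTRY}" || return 1
  ${DOCKER} push "${remote}"
}

docker_push
```

Because the repository script sources the helper before defining its own functions, a repository-level `build_docker_local` replaces the default one here, while `docker_push` stays shared.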

    #!/usr/bin/env groovy
    @Library('<jenkins-shared-repository>@release') // Preconfigured in Jenkins
    import com.example.Helper // Jenkins Shared Library import

    def getDockerImageName() {
      return "custom-name"
    }

    def helper = new Helper()

    pipeline {
      environment {
        GITHUB_TOKEN = credentials("xxxx")
        DOCKER_IMAGE_NAME = getDockerImageName() // Local overridden execution
        DOCKER_IMAGE_TAG = helper.getDockerTag(GIT_COMMIT, env.BRANCH_NAME)
        DOCKER = getDockerEnv.yesNo(env.BRANCH_NAME) // Ask question based on branch
      }
      // ....
      stages {
        stage("Test") {
          steps { sh "make test" }
        }
        stage("StaticCodeAnalysis") {
          steps { sh "make sca" }
        }
        stage("Build") {
          steps { sh "make gradle_build" }
        }
        stage("Download Jenkins helper") {
          // Get common bash utility
          steps { getDockerHelper(GITHUB_TOKEN) }
        }
        stage("BuildDocker") {
          steps { sh "make docker_build" }
        }
        stage("PushDocker") {
          steps { sh "make docker_push" }
        }
      }
      // ..
    }

Benefits

This gives developers a standard way to build and test code on their local system for any codebase throughout the organisation.

The CI system uses the same approach that the developer uses while working on the codebase. This reduces the drift between the commands run on the local system and in the CI environment.

The approach is extensible: the CI environment can override some command-level options using environment variables.

The utility library holds all the common, reusable functions, avoiding duplication and reducing maintenance across all jobs.

Although we used Jenkins, the simplicity of Makefiles makes it straightforward to switch to any other CI tool. Only the tool-specific logic that calls the make targets changes.
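The environment-variable override mentioned among the benefits can be sketched with Bash default expansion. `TEST_RUNNER` and `TEST_ARGS` are hypothetical variable names chosen for this example, and the function only prints the command it would run.

```shell
#!/bin/bash
# TEST_RUNNER and TEST_ARGS are hypothetical names used for illustration.
# Each falls back to a local-friendly default when the variable is unset or empty.
test_command() {
  local runner="${TEST_RUNNER:-./gradlew}"
  local args="${TEST_ARGS:-test --offline}"
  echo "${runner} ${args}"
}

# On a developer machine nothing is set, so the defaults apply.
test_command

# A CI job exports different options without touching the script.
TEST_ARGS="test --info" test_command
```

The script stays identical in both environments; only the environment around it differs.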

As the devops movement tries to reduce the gap between the software development and operations, it emphasizes on the faster and reliable delivery of the software. However it doesn’t enforce any concrete implementation details. It explains the goal where we should aim but the journey can be unique for each organization. Continuous integration is the initial part of this journey. It enables developers to continuously merge their new features and bugfixes. Typically continuous integration happens in a separate environment than the one developer uses while building and testing the software locally but it still has these exact steps and more. If we put a little more thought into designing the right tooling, we can get single interface for building and testing the software which is independent of the CI tool and works across different environments.

In this post we expand on this idea, understand what are the benefits, and how we achieved this with Jenkins for one of our clients. If you’re an experienced DevOps practitioner feel free to skip first two sections which establish some background.

Infrastructure as code

Practices such as Infrastructure as code (IaC) promote managing parts of infrastructure using the similar practices we follow while developing application software. It brings many of the benefits of best practices that are followed in the application software development process. Some examples are

Using version control

Change review and approval

Distributing packages to other people that might be solving the same problems.

Writing tests!

Once we have moved to IaC, we now are back with the problems we faced to maintain the quality of the codebase. How do we avoid duplicating code written by multiple folks in the team, how do we design the codebase to be independent of the underlying tool?

Continuous Integration

Continuous Integration is one of the pillars of modern DevOps. It encourages the practice of integrating code more frequently in turn reducing the time to market. Once the developer pushes code to feature/non-production branch, the typical process of continuous integration looks like below

If at any point there is a failure, all the subsequent steps are skipped and the overall status is marked as a failure. This helps the team to catch any issues early on and gives the confidence to merge the change into mainline code.

Continuous Integration != CI Tools

CI doesn’t enforce what tools need to be used. It talks about the process and not the implementation. Teams then can select the tools that fit their requirement and implement the process. One popular tool is Jenkins.

If you notice the CI process, some initial steps apply to the software development flow as well. Any developer would need to build the code and test it on their local system. Most likely the steps to build the code in local setup and CI setup would be the same. For running tests, depending on the quality and coverage of the tests, a developer might choose to run only specific tests related to the module they are working on. However, the CI environment will run the entire test suite to ensure no corner cases are failing.

The gap between Local and CI environment

One of the core principles of the DevOps movement is to reduce the gap between development and operations. Since CI environment is by nature separate from the developer’s local system. It does not provide the same ease of use to debug the pipeline if any failure happens. Developers might need to familiarise themselves with tools which CI systems provide. To avoid this friction, we need to build the pipelines in a way that allows the developer to focus on the problem itself without needing any special knowledge.

Bridging this gap with Makefiles and Bash script

When we were building a CI pipeline for one of our clients we solved this problem by sticking to good old tools Bash and Makefiles! We started by introducing Makefiles in all of the repositories. Every repository, irrespective of the language (Java/Go/Node), framework(Spring boot/Flink) had a Makefile with the following targets:

build: to build the code

test: to test the code

sca: source code analysis

build_docker_local: to build the docker image with the latest tag

default: echo "Use one of the - build|test|docker_build|docker_push|docker_cleanup|sca" gradle_build: /bin/bash ./build_scripts.sh gradle_build test: /bin/bash ./build_scripts.sh test #.... similar other steps

We created a single bash script with functions defined for each of these make targets. So essentially make command just invokes a bash function from the script but it provides a good standard to maintain across repositories. All the functions in this bash script are self-dependent and no other library or tool is required to invoke them. So a developer can easily invoke make commands to build and test on their local system.

#!/bin/bash if test -f "ci-helper.sh"; then source "ci-helper.sh" # If a helper file is found locally, read it. fi gradle_build() { #.. custom build step } test() { echo "Running Tests...." # custom test command } # No sca() defined, so it will be used from ci-helper.sh # Override the docker build command if needed # But use common docker tag format defined in ci-helper.sh build_docker_local() { docker build --rm --build-arg --tag "$ __docker_local_tag )" . } # Magic to keep all bash in single file! # Call the function supplied in the arguments $*

The ci-helper is another bash script we used to refactor some common CI related utilities to avoid duplicating everywhere. This file is only available in CI environment and this utility bash script is required (read ‘source’d) into the repository bash script. The bash functions inside the utility library call the repository-specific functions and provide a higher-level abstraction useful for CI. So for example, when the CI system does make docker_push it invokes the docker_push function defined by utility library which makes a call to docker_build_local defined by the repository-specific bash script and then tags the image appropriately, authenticates with private docker registry, and pushes it.

The only remaining part is using these makefiles in a CI tool. We used Jenkins, because that’s what the most developers were already familiar with and it served our purpose. But if you notice the Jenkinsfile, the steps are simple make calls. If we ever want to switch to any other CI tool, it’s just the matter of changing the tool itself. All the other details remain the same.

#/usr/bin/env groovy @Library('<jenkins-shared-repository>@release") // Preconfigured in Jenkins import com.example.Helper // Jenkins Shared Library import def gettockermageName) { return "custom-name" } def helper = new Helper(); pipeline { environment { GITHUB_TOKEN=credentials("xxxx") DOCKER_IMAGE_NAME=getDockerImageName() //Local overriden execution DOCKER_IMAGE_TAG=helper.getDockerTag(GIT_COMMIT, env. BRANCH_NAME ) DOCKER=getDockerEnv.yesNo(env.BRANCH_NAME) // Ask question based on branch } // .... stages { stage("Test") { steps { sh "make test" } } stage ("StaticCodeAnalysis") { steps { sh "make sca" } stage ("Build") { steps { sh "make gradle_build" } } stage ("Download Jenkins helper") { // Get commmon bash utility steps { getDockerHelper(GITHUB_TOKEN) } } stage ("BuildDocker") { steps { sh "make docker build" } } stage ("PushDocker") { steps { sh "make docker_push" } } } // .. }

Benefits

This way we have a solution for developers which allows them to use a standard format of building and testing code on their local system for any codebase throughout the organisation.

The CI system invokes the same approach that the developer is going to use while working on the codebase. This reduces the drift between the local system and CI environment commands.

The extensible approach allows the CI environment to be able to override some command-level options using Environment variables.

The utility library has all the common functions reusable, avoiding duplication and maintenance across all jobs.

Although we have used Jenkins, the simplicity of Makefile makes it completely transparent to switch to any other CI tool. Only the tools specific logic to call make target changes based on the tool.

As the devops movement tries to reduce the gap between the software development and operations, it emphasizes on the faster and reliable delivery of the software. However it doesn’t enforce any concrete implementation details. It explains the goal where we should aim but the journey can be unique for each organization. Continuous integration is the initial part of this journey. It enables developers to continuously merge their new features and bugfixes. Typically continuous integration happens in a separate environment than the one developer uses while building and testing the software locally but it still has these exact steps and more. If we put a little more thought into designing the right tooling, we can get single interface for building and testing the software which is independent of the CI tool and works across different environments.

In this post we expand on this idea, understand what are the benefits, and how we achieved this with Jenkins for one of our clients. If you’re an experienced DevOps practitioner feel free to skip first two sections which establish some background.

Infrastructure as code

Practices such as Infrastructure as code (IaC) promote managing parts of infrastructure using the similar practices we follow while developing application software. It brings many of the benefits of best practices that are followed in the application software development process. Some examples are

Using version control

Change review and approval

Distributing packages to other people that might be solving the same problems.

Writing tests!

Once we have moved to IaC, we now are back with the problems we faced to maintain the quality of the codebase. How do we avoid duplicating code written by multiple folks in the team, how do we design the codebase to be independent of the underlying tool?

Continuous Integration

Continuous Integration is one of the pillars of modern DevOps. It encourages the practice of integrating code more frequently in turn reducing the time to market. Once the developer pushes code to feature/non-production branch, the typical process of continuous integration looks like below

If at any point there is a failure, all the subsequent steps are skipped and the overall status is marked as a failure. This helps the team to catch any issues early on and gives the confidence to merge the change into mainline code.

Continuous Integration != CI Tools

CI doesn’t enforce what tools need to be used. It talks about the process and not the implementation. Teams then can select the tools that fit their requirement and implement the process. One popular tool is Jenkins.

If you notice the CI process, some initial steps apply to the software development flow as well. Any developer would need to build the code and test it on their local system. Most likely the steps to build the code in local setup and CI setup would be the same. For running tests, depending on the quality and coverage of the tests, a developer might choose to run only specific tests related to the module they are working on. However, the CI environment will run the entire test suite to ensure no corner cases are failing.

The gap between Local and CI environment

One of the core principles of the DevOps movement is to reduce the gap between development and operations. Since CI environment is by nature separate from the developer’s local system. It does not provide the same ease of use to debug the pipeline if any failure happens. Developers might need to familiarise themselves with tools which CI systems provide. To avoid this friction, we need to build the pipelines in a way that allows the developer to focus on the problem itself without needing any special knowledge.

Bridging this gap with Makefiles and Bash script

When we were building a CI pipeline for one of our clients we solved this problem by sticking to good old tools Bash and Makefiles! We started by introducing Makefiles in all of the repositories. Every repository, irrespective of the language (Java/Go/Node), framework(Spring boot/Flink) had a Makefile with the following targets:

build: to build the code

test: to test the code

sca: source code analysis

build_docker_local: to build the docker image with the latest tag

default: echo "Use one of the - build|test|docker_build|docker_push|docker_cleanup|sca" gradle_build: /bin/bash ./build_scripts.sh gradle_build test: /bin/bash ./build_scripts.sh test #.... similar other steps

We created a single bash script with functions defined for each of these make targets. So essentially make command just invokes a bash function from the script but it provides a good standard to maintain across repositories. All the functions in this bash script are self-dependent and no other library or tool is required to invoke them. So a developer can easily invoke make commands to build and test on their local system.

#!/bin/bash if test -f "ci-helper.sh"; then source "ci-helper.sh" # If a helper file is found locally, read it. fi gradle_build() { #.. custom build step } test() { echo "Running Tests...." # custom test command } # No sca() defined, so it will be used from ci-helper.sh # Override the docker build command if needed # But use common docker tag format defined in ci-helper.sh build_docker_local() { docker build --rm --build-arg --tag "$ __docker_local_tag )" . } # Magic to keep all bash in single file! # Call the function supplied in the arguments $*

The ci-helper is another bash script we used to refactor some common CI related utilities to avoid duplicating everywhere. This file is only available in CI environment and this utility bash script is required (read ‘source’d) into the repository bash script. The bash functions inside the utility library call the repository-specific functions and provide a higher-level abstraction useful for CI. So for example, when the CI system does make docker_push it invokes the docker_push function defined by utility library which makes a call to docker_build_local defined by the repository-specific bash script and then tags the image appropriately, authenticates with private docker registry, and pushes it.

The only remaining part is using these makefiles in a CI tool. We used Jenkins, because that’s what the most developers were already familiar with and it served our purpose. But if you notice the Jenkinsfile, the steps are simple make calls. If we ever want to switch to any other CI tool, it’s just the matter of changing the tool itself. All the other details remain the same.

#/usr/bin/env groovy @Library('<jenkins-shared-repository>@release") // Preconfigured in Jenkins import com.example.Helper // Jenkins Shared Library import def gettockermageName) { return "custom-name" } def helper = new Helper(); pipeline { environment { GITHUB_TOKEN=credentials("xxxx") DOCKER_IMAGE_NAME=getDockerImageName() //Local overriden execution DOCKER_IMAGE_TAG=helper.getDockerTag(GIT_COMMIT, env. BRANCH_NAME ) DOCKER=getDockerEnv.yesNo(env.BRANCH_NAME) // Ask question based on branch } // .... stages { stage("Test") { steps { sh "make test" } } stage ("StaticCodeAnalysis") { steps { sh "make sca" } stage ("Build") { steps { sh "make gradle_build" } } stage ("Download Jenkins helper") { // Get commmon bash utility steps { getDockerHelper(GITHUB_TOKEN) } } stage ("BuildDocker") { steps { sh "make docker build" } } stage ("PushDocker") { steps { sh "make docker_push" } } } // .. }

Benefits

This way we have a solution for developers which allows them to use a standard format of building and testing code on their local system for any codebase throughout the organisation.

The CI system invokes the same approach that the developer is going to use while working on the codebase. This reduces the drift between the local system and CI environment commands.

The extensible approach allows the CI environment to be able to override some command-level options using Environment variables.

The utility library has all the common functions reusable, avoiding duplication and maintenance across all jobs.

Although we have used Jenkins, the simplicity of Makefile makes it completely transparent to switch to any other CI tool. Only the tools specific logic to call make target changes based on the tool.

As the devops movement tries to reduce the gap between the software development and operations, it emphasizes on the faster and reliable delivery of the software. However it doesn’t enforce any concrete implementation details. It explains the goal where we should aim but the journey can be unique for each organization. Continuous integration is the initial part of this journey. It enables developers to continuously merge their new features and bugfixes. Typically continuous integration happens in a separate environment than the one developer uses while building and testing the software locally but it still has these exact steps and more. If we put a little more thought into designing the right tooling, we can get single interface for building and testing the software which is independent of the CI tool and works across different environments.

In this post we expand on this idea, understand what are the benefits, and how we achieved this with Jenkins for one of our clients. If you’re an experienced DevOps practitioner feel free to skip first two sections which establish some background.

Infrastructure as code

Practices such as Infrastructure as code (IaC) promote managing parts of infrastructure using the similar practices we follow while developing application software. It brings many of the benefits of best practices that are followed in the application software development process. Some examples are

Using version control

Change review and approval

Distributing packages to other people that might be solving the same problems.

Writing tests!

Once we have moved to IaC, we now are back with the problems we faced to maintain the quality of the codebase. How do we avoid duplicating code written by multiple folks in the team, how do we design the codebase to be independent of the underlying tool?

Continuous Integration

Continuous Integration is one of the pillars of modern DevOps. It encourages the practice of integrating code more frequently in turn reducing the time to market. Once the developer pushes code to feature/non-production branch, the typical process of continuous integration looks like below

If at any point there is a failure, all the subsequent steps are skipped and the overall status is marked as a failure. This helps the team to catch any issues early on and gives the confidence to merge the change into mainline code.

Continuous Integration != CI Tools

CI doesn’t enforce what tools need to be used. It talks about the process and not the implementation. Teams then can select the tools that fit their requirement and implement the process. One popular tool is Jenkins.

If you notice the CI process, some initial steps apply to the software development flow as well. Any developer would need to build the code and test it on their local system. Most likely the steps to build the code in local setup and CI setup would be the same. For running tests, depending on the quality and coverage of the tests, a developer might choose to run only specific tests related to the module they are working on. However, the CI environment will run the entire test suite to ensure no corner cases are failing.

The gap between Local and CI environment

One of the core principles of the DevOps movement is to reduce the gap between development and operations. Since CI environment is by nature separate from the developer’s local system. It does not provide the same ease of use to debug the pipeline if any failure happens. Developers might need to familiarise themselves with tools which CI systems provide. To avoid this friction, we need to build the pipelines in a way that allows the developer to focus on the problem itself without needing any special knowledge.

Bridging this gap with Makefiles and Bash script

When we were building a CI pipeline for one of our clients we solved this problem by sticking to good old tools Bash and Makefiles! We started by introducing Makefiles in all of the repositories. Every repository, irrespective of the language (Java/Go/Node), framework(Spring boot/Flink) had a Makefile with the following targets:

build: to build the code

test: to test the code

sca: source code analysis

build_docker_local: to build the docker image with the latest tag

default: echo "Use one of the - build|test|docker_build|docker_push|docker_cleanup|sca" gradle_build: /bin/bash ./build_scripts.sh gradle_build test: /bin/bash ./build_scripts.sh test #.... similar other steps

We created a single bash script with functions defined for each of these make targets. So essentially make command just invokes a bash function from the script but it provides a good standard to maintain across repositories. All the functions in this bash script are self-dependent and no other library or tool is required to invoke them. So a developer can easily invoke make commands to build and test on their local system.

#!/bin/bash if test -f "ci-helper.sh"; then source "ci-helper.sh" # If a helper file is found locally, read it. fi gradle_build() { #.. custom build step } test() { echo "Running Tests...." # custom test command } # No sca() defined, so it will be used from ci-helper.sh # Override the docker build command if needed # But use common docker tag format defined in ci-helper.sh build_docker_local() { docker build --rm --build-arg --tag "$ __docker_local_tag )" . } # Magic to keep all bash in single file! # Call the function supplied in the arguments $*

The ci-helper is another bash script we used to refactor some common CI related utilities to avoid duplicating everywhere. This file is only available in CI environment and this utility bash script is required (read ‘source’d) into the repository bash script. The bash functions inside the utility library call the repository-specific functions and provide a higher-level abstraction useful for CI. So for example, when the CI system does make docker_push it invokes the docker_push function defined by utility library which makes a call to docker_build_local defined by the repository-specific bash script and then tags the image appropriately, authenticates with private docker registry, and pushes it.

The only remaining part is using these makefiles in a CI tool. We used Jenkins, because that’s what the most developers were already familiar with and it served our purpose. But if you notice the Jenkinsfile, the steps are simple make calls. If we ever want to switch to any other CI tool, it’s just the matter of changing the tool itself. All the other details remain the same.

#/usr/bin/env groovy @Library('<jenkins-shared-repository>@release") // Preconfigured in Jenkins import com.example.Helper // Jenkins Shared Library import def gettockermageName) { return "custom-name" } def helper = new Helper(); pipeline { environment { GITHUB_TOKEN=credentials("xxxx") DOCKER_IMAGE_NAME=getDockerImageName() //Local overriden execution DOCKER_IMAGE_TAG=helper.getDockerTag(GIT_COMMIT, env. BRANCH_NAME ) DOCKER=getDockerEnv.yesNo(env.BRANCH_NAME) // Ask question based on branch } // .... stages { stage("Test") { steps { sh "make test" } } stage ("StaticCodeAnalysis") { steps { sh "make sca" } stage ("Build") { steps { sh "make gradle_build" } } stage ("Download Jenkins helper") { // Get commmon bash utility steps { getDockerHelper(GITHUB_TOKEN) } } stage ("BuildDocker") { steps { sh "make docker build" } } stage ("PushDocker") { steps { sh "make docker_push" } } } // .. }

Benefits

This approach gives developers a standard way of building and testing code on their local system, for any codebase across the organisation.

The CI system invokes the same commands that developers use while working on the codebase. This reduces the drift between the commands run locally and those run in the CI environment.

The approach is extensible: the CI environment can override some command-level options using environment variables.

The utility library keeps all the common functions in one reusable place, avoiding duplication and repeated maintenance across jobs.

Although we used Jenkins, the simplicity of the Makefile interface makes it straightforward to switch to any other CI tool. Only the tool-specific logic that calls the make targets changes.
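
The environment-variable override mentioned above could look something like the sketch below. The names here are assumptions for illustration only: GRADLE_BIN, TEST_OPTS, and the run_tests function do not come from the original scripts.

```shell
#!/bin/bash
# Hypothetical sketch; GRADLE_BIN, TEST_OPTS and run_tests are assumed names.

# Default to the Gradle wrapper, but let the environment choose another binary.
GRADLE_BIN="${GRADLE_BIN:-./gradlew}"

run_tests() {
  local -a extra_opts=()
  # Split TEST_OPTS on whitespace; the array stays empty when it is unset.
  read -r -a extra_opts <<< "${TEST_OPTS:-}"
  "$GRADLE_BIN" test "${extra_opts[@]}"
}
```

A developer would run the function with no extra options, while the CI job could export something like TEST_OPTS="--no-daemon --info" before invoking the same make target.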
